Something remarkable is happening right now. The software running quietly in data centers around the world is no longer just answering questions – it is booking flights, writing code, running experiments, and making decisions. AI agents have graduated from clever chatbots to something altogether more unsettling: autonomous actors that actually do things in the world.

The question is no longer whether AI can impress us on a test. The real question – the one that keeps researchers, executives, and frankly a lot of ordinary people up at night – is whether these systems are genuinely closing in on human-level performance. The honest answer is complicated, and a little surprising. Let’s dive in.

From Chatbots to Autonomous Agents: What Actually Changed

Not long ago, AI meant a system that responded when you typed something at it. That era feels almost quaint now. The years 2025 and 2026 mark the transition from AI assistants to agentic AI – autonomous systems that don’t just answer questions but actually do things.

A key inflection point came in late 2024, when Anthropic released the Model Context Protocol, which gave developers a standardized way to connect large language models to external tools and data sources, making it much easier for models to act beyond generating text. That was the moment the door swung open.
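
MCP messages are JSON-RPC 2.0 requests and responses. The sketch below builds the kind of message a client sends when it asks a server to run a tool. It only constructs the message and does not implement transport or a real server. The `search_flights` tool and its arguments are hypothetical; a real MCP server advertises its own tools.

```python
import json

def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",        # MCP messages follow JSON-RPC 2.0
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # MCP method for invoking a server-side tool
        "params": {
            "name": tool_name,       # a tool the server listed via tools/list
            "arguments": arguments,  # inputs matching that tool's input schema
        },
    }
    return json.dumps(message)

# Hypothetical tool name and arguments, for illustration only.
print(make_tool_call(1, "search_flights", {"origin": "SFO", "destination": "JFK"}))
```

The point is the standardization: any MCP-compatible model client can call any MCP server's tools with the same message shape.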

AI agents differ from traditional AI assistants, which need a fresh prompt for every response. In theory, a user gives an agent a high-level task, and the agent works out how to complete it. Think of the difference between handing someone a to-do list and hiring a contractor who plans the job, sources materials, and builds the whole thing independently.
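
The "figures out how to complete it" part usually comes down to a loop: plan a step, act with a tool, observe the result, repeat until done. This is a minimal sketch of that loop, not any vendor's implementation. The `plan_next_step` function is a hard-coded stand-in for what would really be a language model, and both tools are hypothetical.

```python
from __future__ import annotations

from typing import Callable, Optional

# Hypothetical tools; in a real agent these would call external services.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"3 contractors found for '{query}'",
    "book": lambda name: f"booked {name}",
}

def plan_next_step(goal: str, history: list[str]) -> Optional[tuple[str, str]]:
    """Stand-in for an LLM planner: returns (tool, argument), or None when done."""
    if not history:
        return ("search", goal)
    if len(history) == 1:
        return ("book", "contractor #1")
    return None  # the planner judges the goal complete

def run_agent(goal: str, max_steps: int = 10) -> list[str]:
    """Plan, act, and observe until the planner stops or the step budget runs out."""
    history: list[str] = []
    for _ in range(max_steps):  # cap steps so a confused agent cannot loop forever
        step = plan_next_step(goal, history)
        if step is None:
            break
        tool, arg = step
        observation = TOOLS[tool](arg)  # act, then record what happened
        history.append(observation)
    return history

print(run_agent("kitchen renovation"))
```

The step cap matters: an agent that misreads its own observations can otherwise keep acting indefinitely.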

In 2025, agentic browsers began to appear. Tools such as Perplexity’s Comet and OpenAI’s ChatGPT Atlas reframed the browser as an active participant rather than a passive interface: instead of merely helping you search for vacation details, the browser can help book the vacation itself.

Where AI Agents Are Already Beating Humans

Here’s the thing: in certain narrow areas, AI agents are not just matching humans; they are leaving them behind. Speed is the most obvious advantage. Working alone, AI agents complete tasks in roughly ninety percent less time than humans, take far fewer actions, and cost a fraction of what human workflows cost. That is not a marginal edge. That is a different league entirely.

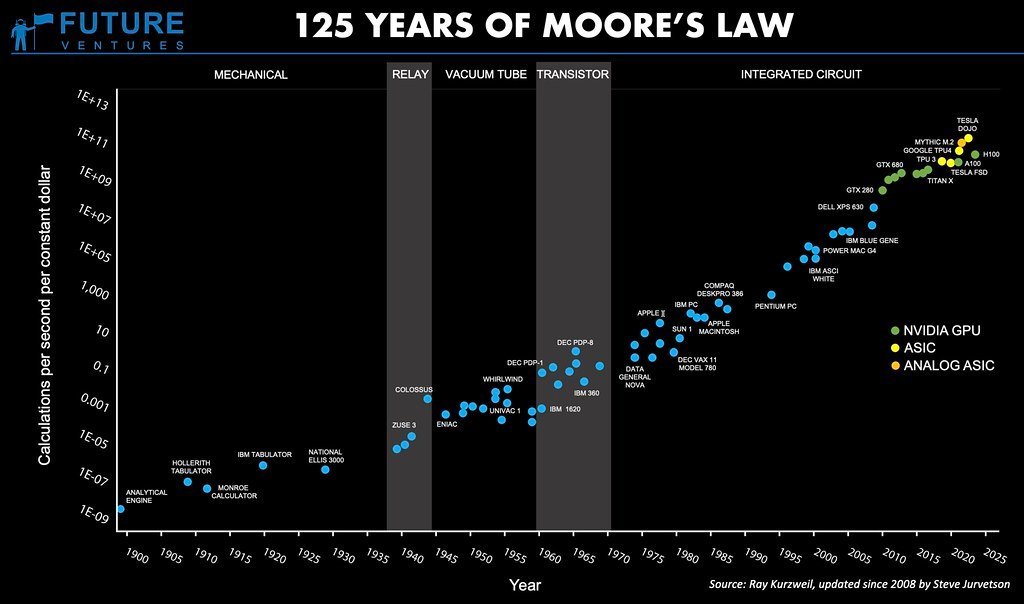

Research indicates that the length of tasks AI agents can complete autonomously with a fifty percent success rate, measured by how long those tasks take human professionals, has been doubling approximately every seven months. If that trend holds, within five years AI agents could handle on their own many tasks that currently require human effort.
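
To make the doubling claim concrete, here is a quick worked calculation, assuming the seven-month doubling period holds steadily for all five years (which the research does not guarantee). Sixty months is about 8.6 doublings, or roughly a 380-fold increase in the length of tasks agents can handle.

```python
def growth_factor(months: float, doubling_months: float = 7.0) -> float:
    """How many times longer the 50%-success task length becomes after `months`,
    assuming it keeps doubling every `doubling_months`."""
    return 2 ** (months / doubling_months)

factor = growth_factor(60)  # five years
print(f"{factor:.0f}x")     # prints 380x
```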

OpenAI’s o3 model achieved a striking score on the ARC-AGI benchmark, which tests human-like problem-solving on novel puzzles, by generating multiple solutions in parallel and using consensus mechanisms to select the most reliable answer. That kind of self-checking is a foundation for autonomous action. In scientific domains, too, the numbers are moving fast: in a collaboration between OpenAI and Red Queen Bio, GPT-5 improved the efficiency of a molecular-cloning protocol by a factor of 79, dramatically accelerating a wet-lab biotech task.
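
The details of how o3 chooses among its parallel attempts are not spelled out here, so the sketch below is only a generic illustration of the consensus idea: simple majority voting over several sampled answers, with the share of agreeing samples as a rough confidence signal. The sample answers are made up.

```python
from collections import Counter

def majority_vote(candidates: list[str]) -> tuple[str, float]:
    """Return the most common answer and the share of candidates that agree with it."""
    if not candidates:
        raise ValueError("need at least one candidate answer")
    answer, count = Counter(candidates).most_common(1)[0]
    return answer, count / len(candidates)

# Hypothetical answers from five independently sampled solution attempts.
samples = ["42", "42", "17", "42", "40"]
print(majority_vote(samples))  # ('42', 0.6)
```

A low agreement ratio is a useful cue that the answer deserves a second look rather than blind trust.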

The Uncomfortable Truth: Where They Still Fall Short

Let’s be real – the hype machine does not always tell you the full story. When agents work solo, success rates drop significantly compared to human-only workflows, largely due to mistakes like hallucinations, misuse of tools, or over-reliance on code-writing for tasks better suited to visual or judgment-based thinking.

The best current models can succeed at some tasks that take even expert humans hours, but they can only reliably complete tasks that take humans a few minutes. That gap between benchmark glory and real-world reliability is enormous, and breathless tech coverage often glosses over it.

Lacking a genuine understanding of the world and human-like common sense, AI systems struggle to adapt, to reason through nuance the way people do, and to make reliable decisions when faced with the unfamiliar. Common sense, abstract thinking, creativity, ethical judgment, and contextual wisdom remain firmly human territory, at least for now.

The Hybrid Model: When Human Plus AI Beats Both

I think the most genuinely exciting finding in recent research is not about AI replacing humans. It is about what happens when the two work together. Hybrid human-AI workflows, in which humans handle judgment-heavy or ambiguous tasks and leave structured, programmable work to AI, deliver superior results, boosting overall performance by nearly seventy percent compared to either working alone. That is a staggering improvement.

Human-AI collaborative teams were significantly more productive than human-only teams, spending far less time on editing while producing work of comparable quality. The metaphor that comes to mind is a surgeon and a robotic arm: neither alone is as precise or as capable as both together.

Full automation often shifts work rather than eliminating it – from execution to verification, correction, and risk management. The most effective deployments pair agents with humans deliberately, a division of labor that plays to the strengths of both and avoids mistaking speed for reliability. That is a nuance worth sitting with.
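
One way to encode that deliberate division of labor is a simple router. This is a minimal sketch, assuming each task carries a hypothetical `ambiguous` flag and a confidence score reported by the agent; neither comes from the research cited here, and the 0.8 threshold is arbitrary. Judgment-heavy work goes to a person, low-confidence work gets human verification, and only the rest runs unattended.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    ambiguous: bool          # needs judgment or has unclear requirements
    agent_confidence: float  # hypothetical self-reported score in [0, 1]

def route(task: Task, threshold: float = 0.8) -> str:
    """Send judgment-heavy work to a person, verify low-confidence work, automate the rest."""
    if task.ambiguous:
        return "human"
    if task.agent_confidence < threshold:
        return "agent, then human review"  # agent drafts, a person verifies
    return "agent"

tasks = [
    Task("reformat spreadsheet", ambiguous=False, agent_confidence=0.95),
    Task("summarize contract", ambiguous=False, agent_confidence=0.6),
    Task("negotiate with vendor", ambiguous=True, agent_confidence=0.9),
]
for t in tasks:
    print(t.name, "->", route(t))
```

The middle branch is where the "verification" work described above lands: automation still saves time, but a person signs off.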

The Explosive Growth Nobody Is Fully Ready For

Forty percent of enterprise applications will be integrated with task-specific AI agents by 2026, up from less than five percent in 2025, according to Gartner. That is not gradual adoption; it is an avalanche, and most companies are still figuring out what it means for their workforce.

The global AI agents market is projected to reach $7.6 billion in 2025, up from $5.4 billion in 2024, and is expected to grow at a compound annual growth rate of nearly forty-six percent through 2030. Growth that fast is rarely sustained for long in any technology sector. Yet the forecasts keep climbing.
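
The compounding is easier to feel with the numbers worked out. This quick sketch simplifies the forecast by applying a flat forty-six percent rate to each of the five years from 2025 to 2030. Under that assumption, the figures imply about forty-one percent growth from 2024 to 2025 and a market of roughly $50 billion by 2030.

```python
def project(value: float, rate: float, years: int) -> float:
    """Compound `value` at annual `rate` (0.46 means 46%) for `years` years."""
    return value * (1 + rate) ** years

def implied_rate(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1

print(f"2024->2025 growth: {implied_rate(5.4, 7.6, 1):.0%}")     # about 41%
print(f"2030 at 46%/yr: ${project(7.6, 0.46, 5):.1f} billion")  # about $50 billion
```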

Salesforce announced it would not hire software engineers in 2025, citing a thirty percent productivity increase from its AI agent technology. Whatever one makes of the claim, the announcement was a signal. The economics of AI agents are already reshaping hiring decisions at major companies, and this is just the beginning of that wave.

What This Means for the Future of Human Work

Ask which cognitive tasks humans can do that AI systems cannot, and a clear answer is harder to give today than it used to be. Older benchmarks pitted specialized AIs against the general population; today’s benchmarks more often pit general-purpose AIs against expert humans on problems that demand expertise and complex reasoning. The finish line keeps moving.

If the measured trend from the past six years continues for two to four more years, generalist autonomous agents will be capable of performing a wide range of week-long tasks. That is not science fiction. That is extrapolation from verified, documented data. Still, extrapolation is not destiny, and there are real limits to how fast these systems can evolve.
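
A back-of-the-envelope check shows why a two-to-four-year window is plausible. This sketch assumes, purely for illustration, that today's fifty percent task horizon is about one hour of human work and that a "week-long task" means 40 working hours; neither figure comes from this article.

```python
import math

def months_until(target_hours: float, current_hours: float, doubling_months: float = 7.0) -> float:
    """Months until the task horizon reaches `target_hours`, if it keeps doubling on schedule."""
    doublings = math.log2(target_hours / current_hours)
    return doublings * doubling_months

# Illustrative assumptions only: a one-hour horizon today and a 40-hour work week.
months = months_until(target_hours=40, current_hours=1)
print(f"{months:.0f} months (~{months / 12:.1f} years)")  # 37 months (~3.1 years)
```

Under those assumptions, about 5.3 doublings are needed, or roughly three years at seven months each. Change the starting horizon or the doubling period and the answer shifts, which is why the extrapolation deserves caution.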

Reasoning AI will complement human intelligence, amplifying productivity and enabling breakthroughs in complex problem-solving, with human roles potentially focusing more on strategic oversight, ethics, creativity, and interpersonal relationships. The market will likely place a vastly higher value on intrinsic human skills, which AI can augment but not replicate.

The most honest way to frame it is this: AI agents will not replace what makes humans distinctly human. They will, however, replace humans who refuse to understand what AI agents can do. The window to adapt is open, but it will not stay open forever. What would you rather be – the surgeon or the one who never picked up the robotic arm? That choice, right now, is still yours to make.